Many healthcare organizations have shifted from paper to digital, but going digital alone does not automatically mean less administrative work, better processes or improved healthcare outcomes. We see that many healthcare organizations struggle with the large amount of unstructured data in their ecosystem. With unstructured data, you cannot get insights from your data or make the needed correlations across the patient journey. To put a number on that, 80 percent of healthcare documentation is unstructured, and therefore this data goes largely unutilized. Going digital means structuring your data so it can be used for better insights, both for clinical and secondary use, which will ultimately improve healthcare outcomes.

Structuring data is mostly done via the EHR, where health care teams manually enter every symptom, diagnosis, medication and so on in the right format, with the right codification. This leads to a dramatic increase in the administrative burden. At the same time, patient care is getting more complex because patients live longer with chronic diseases. Combined with the increased workloads caused by Covid-19, this results in burnout symptoms in half of health care workers today.

Speech to Text

One way to decrease the administrative burden on health care teams is Speech to Text technology, where health care workers dictate the consultation and every word is automatically transcribed into the EHR. This is mainly done through Nuance, now part of Microsoft, and its Dragon Medical One software, which features speech recognition and voice-enabled workflow capabilities. It is able to capture clinical documentation as much as five times faster than typing, with 99% accuracy.

When using Speech to Text in a (remote) consultation, you need to know who is talking. Luckily, there are companies today that can tackle this complex challenge. With A.I., they are able to identify and separate speakers in real time, even when they interrupt each other or speak at the same time.

In most cases, the transcribed text is then analyzed, processed and entered in the EHR by people who codify every consultation in the organization. As you can imagine, this manual codification of the transcription can be expensive, does not scale and sometimes leads to losing the context of the conversation. So how can we make better use of the automatically transcribed output?

Natural Language Processing (NLP)

Natural language processing, or NLP, is the ability of computers to understand human speech and text. The adoption of this A.I. technology in healthcare is rising because of its proven ability to search, analyze and interpret huge amounts of unstructured patient data. By applying this specific type of machine learning, which has learned from previous healthcare data, NLP has the potential to surface clinical insights from data that was previously buried in text form. This brings incredible potential and opportunities for understanding healthcare quality, improving processes, and providing better outcomes for patients.

How is NLP being used today

In the U.S., we already use Speech to Text combined with NLP through Nuance and its DAX solution. DAX is a comprehensive, AI‑powered, voice‑enabled Ambient Clinical Intelligence (ACI) solution. DAX automatically documents patient encounters accurately and efficiently at the point of care, which delivers better healthcare experiences for providers and patients. Unfortunately, this is not yet available in Europe due to complex language models and tailoring for other markets.

Nevertheless, this has not stopped me from working with customers and partners to utilize NLP in Europe. In the last years I have had the privilege of working with different first and third-party solutions. To give an example, I am working closely with Ramsay Santé and their innovation hub, which uses NLP today to automatically transform their procedures into payments. Their previous way of working was not optimal, and they were looking for a scalable way to improve. For this reason, Ramsay Santé implemented a solution that combines Speech to Text with a customized NLP engine. For Speech to Text, they used Nuance Communications, which is considered the gold standard for healthcare speech to text. The NLP engine was created by Corti, who built a model that finds the correct tariffication codes in the transcribed text. All of this serves the goal of decreasing the administrative burden and optimizing their processes. You can read the full story here: NLP on the fly in Capio Denmark | LinkedIn

Another example is supporting clinicians with their consultations. Many clinicians go from room to room to consult with their patients, often jumping non-stop from one consultation to the next. Every patient is different, and all data needs to get into the EHR in a structured way. In almost all cases, the clinician spends more time typing than talking to or looking at the patient, and most of that data is entered as unstructured text. Some clinicians mitigate this with Speech to Text but still lack automatic codification through NLP.

Text Analytics for Health

Text Analytics for health is Microsoft's first-party healthcare NLP service, offered through the Azure Cognitive Service for Language. This NLP model can be used directly via the Cognitive Services API, or via containers. With containers you can host the Text Analytics for health API on your own infrastructure, which is relevant when you have security or data governance requirements that can’t be fulfilled by calling Text Analytics for health remotely. The service, which is currently available in 7 different languages (English, French, German, Portuguese, Italian, Spanish and Hebrew), extracts and labels relevant medical information from unstructured texts such as doctor’s notes, discharge summaries, clinical documents, and electronic health records. The result of the NLP model can also be returned in FHIR format, which makes integration with the EHR easier.

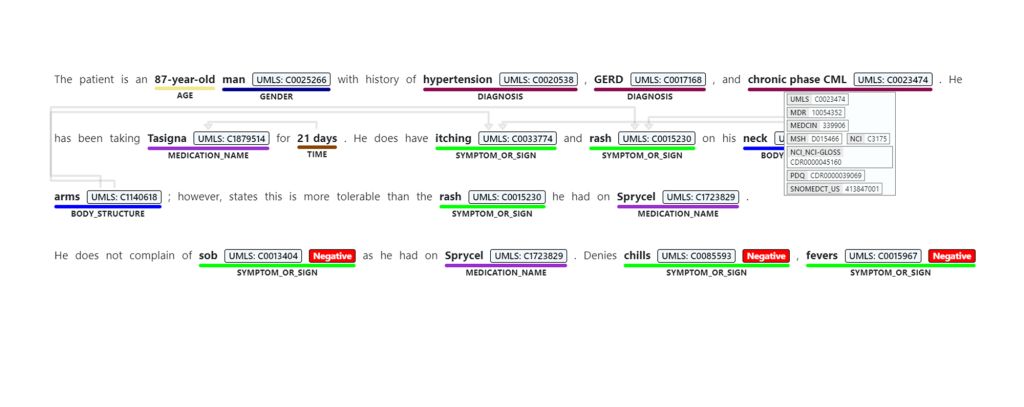

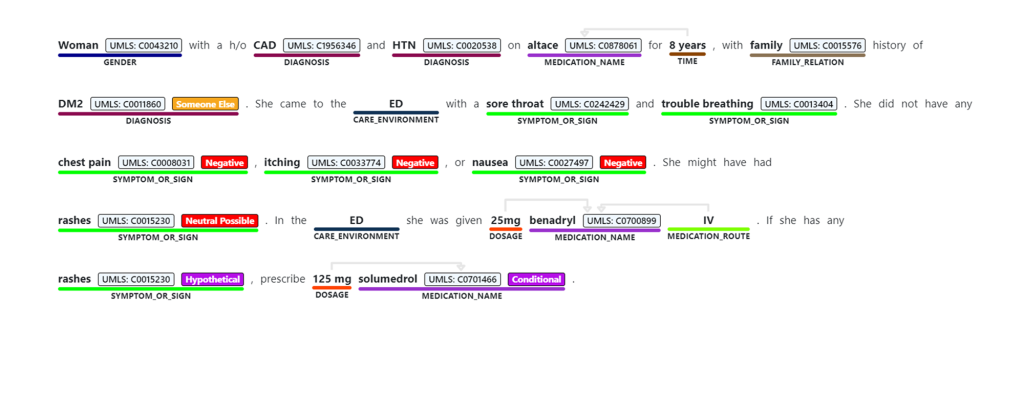

To give some insight into a healthcare NLP model, the Text Analytics for Health model currently supports the following features:

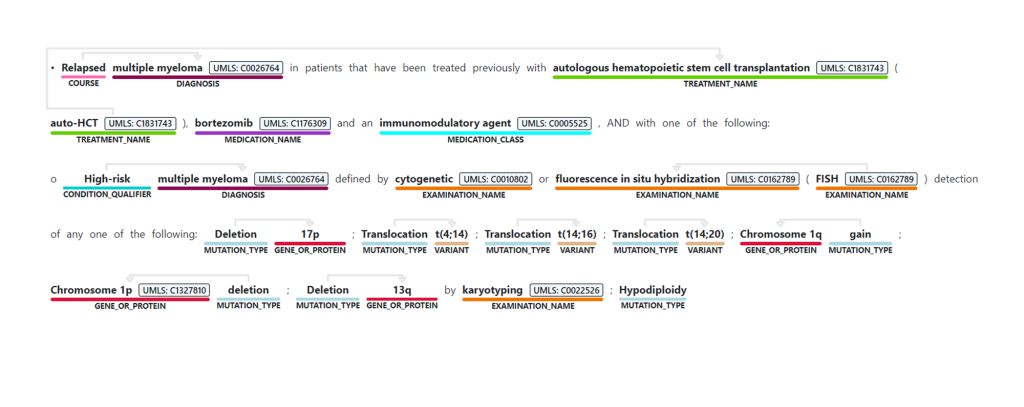

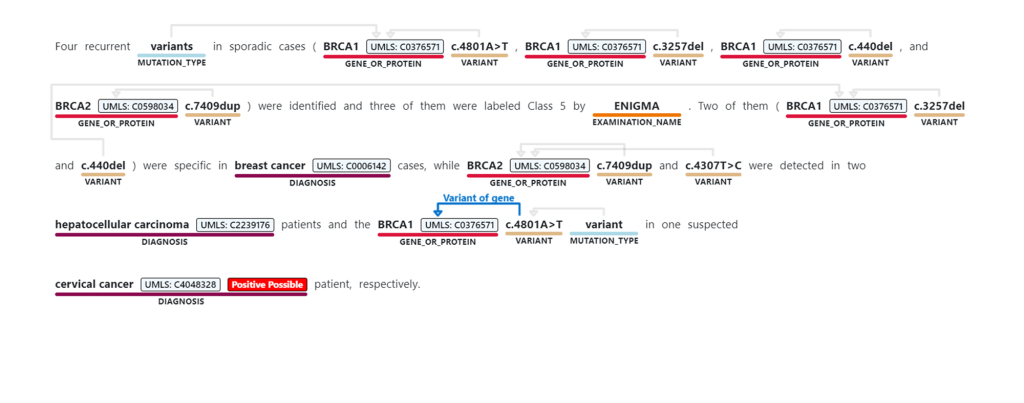

Named Entity Recognition

Named Entity Recognition detects words and phrases in unstructured text that can be associated with one or more semantic types, such as diagnosis, medication name, symptom/sign, or age. There are currently 31 different entity types supported.
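
To make this a bit more tangible, here is a minimal sketch of what working with recognized entities could look like. The sample data is hand-written, and the field names (`text`, `category`, `confidenceScore`) are assumptions loosely modeled on the JSON a healthcare NLP service returns, not a guaranteed contract. The helper `group_by_category` is hypothetical.

```python
from collections import defaultdict

# Hand-written sample shaped like a healthcare NER result.
# Field names and category labels are assumptions for illustration.
SAMPLE_ENTITIES = [
    {"text": "ibuprofen", "category": "MedicationName", "confidenceScore": 0.98},
    {"text": "400 mg", "category": "Dosage", "confidenceScore": 0.95},
    {"text": "headache", "category": "SymptomOrSign", "confidenceScore": 0.91},
    {"text": "nausea", "category": "SymptomOrSign", "confidenceScore": 0.42},
]


def group_by_category(entities, min_confidence=0.5):
    """Group entity texts by semantic type, skipping low-confidence hits."""
    grouped = defaultdict(list)
    for entity in entities:
        if entity["confidenceScore"] >= min_confidence:
            grouped[entity["category"]].append(entity["text"])
    return dict(grouped)


grouped = group_by_category(SAMPLE_ENTITIES)
print(grouped)
```

The confidence threshold is a design choice you would tune per use case: a higher value gives fewer but more reliable entities to write back to the record.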

Relation extraction

Relation extraction identifies meaningful connections between concepts mentioned in text. For example, a “time of condition” relation is found by associating a condition name with a time, and an abbreviation relation by associating an abbreviation with its full description. There are currently 35 different relation types supported.
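
As a rough illustration, relations typically point back to the entities they connect. The sketch below assumes a result where each relation member carries a `ref` ending in the index of an entity in the same document, plus a `role`. That shape, the relation type names and the role names are assumptions for this example, and `resolve_relations` is a hypothetical helper.

```python
# Hand-written sample; the structure and labels are assumptions for illustration.
SAMPLE_DOCUMENT = {
    "entities": [
        {"text": "migraine", "category": "Diagnosis"},
        {"text": "last week", "category": "Time"},
        {"text": "BP", "category": "ExaminationName"},
        {"text": "blood pressure", "category": "ExaminationName"},
    ],
    "relations": [
        {
            "relationType": "TimeOfCondition",
            "entities": [
                {"ref": "#/results/documents/0/entities/0", "role": "Condition"},
                {"ref": "#/results/documents/0/entities/1", "role": "Time"},
            ],
        },
        {
            "relationType": "Abbreviation",
            "entities": [
                {"ref": "#/results/documents/0/entities/2", "role": "AbbreviatedTerm"},
                {"ref": "#/results/documents/0/entities/3", "role": "FullTerm"},
            ],
        },
    ],
}


def resolve_relations(document):
    """Replace entity references in each relation with the entity text."""
    resolved = []
    for relation in document["relations"]:
        roles = {}
        for member in relation["entities"]:
            # The last path segment of the reference is the entity index.
            index = int(member["ref"].rsplit("/", 1)[-1])
            roles[member["role"]] = document["entities"][index]["text"]
        resolved.append((relation["relationType"], roles))
    return resolved


relations = resolve_relations(SAMPLE_DOCUMENT)
for relation_type, roles in relations:
    print(relation_type, roles)
```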

Entity Linking

Entity linking disambiguates distinct entities by associating named entities mentioned in text to concepts found in a predefined database of concepts including the Unified Medical Language System (UMLS). Medical concepts are also assigned preferred naming, as an additional form of normalization.
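
A small sketch of how linked concepts could be consumed downstream: the entity below is hand-written, with a preferred name and a list of links to concept databases. The field names (`name`, `links`, `dataSource`, `id`) are assumptions, the codes are sample values for illustration, and `concept_id` is a hypothetical helper.

```python
# Hand-written sample entity; field names are assumptions for illustration.
SAMPLE_ENTITY = {
    "text": "high blood pressure",
    "category": "Diagnosis",
    "name": "Hypertensive disease",  # preferred (normalized) name
    "links": [
        {"dataSource": "UMLS", "id": "C0020538"},
        {"dataSource": "SNOMEDCT_US", "id": "38341003"},
    ],
}


def concept_id(entity, data_source="UMLS"):
    """Return the concept identifier for one vocabulary, or None if unlinked."""
    for link in entity.get("links", []):
        if link["dataSource"] == data_source:
            return link["id"]
    return None


print(SAMPLE_ENTITY["name"], concept_id(SAMPLE_ENTITY))
```

The point of the normalization is that "high blood pressure", "hypertension" and "HTN" in free text can all end up under the same identifier, which is what makes correlations across notes possible.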

Assertion Detection

The meaning of medical content is strongly affected by modifiers, such as negative or conditional assertions, which can have critical implications if misrepresented. Text Analytics for health supports three categories of assertion detection for entities in the text. There are currently 7 assertion types supported.
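
To show why this matters, here is a minimal sketch that filters out findings that should not be recorded as present in the patient. It assumes each entity may carry an optional `assertion` object with `certainty`, `conditionality` and `association` keys. Those names and values are assumptions for this example, and `findings_to_record` is a hypothetical helper.

```python
# Hand-written sample for a note such as: "Patient has fever and cough, denies
# chest pain. Mother has diabetes. Return if a rash develops."
# Field names and values are assumptions for illustration.
SAMPLE_ENTITIES = [
    {"text": "fever", "category": "SymptomOrSign"},
    {"text": "cough", "category": "SymptomOrSign"},
    {"text": "chest pain", "category": "SymptomOrSign",
     "assertion": {"certainty": "negative"}},
    {"text": "diabetes", "category": "Diagnosis",
     "assertion": {"association": "other"}},
    {"text": "rash", "category": "SymptomOrSign",
     "assertion": {"conditionality": "hypothetical"}},
]


def findings_to_record(entities):
    """Keep only findings asserted as present for the patient."""
    kept = []
    for entity in entities:
        assertion = entity.get("assertion", {})
        if assertion.get("certainty") == "negative":
            continue  # explicitly negated ("denies chest pain")
        if assertion.get("conditionality") is not None:
            continue  # hypothetical or conditional mention
        if assertion.get("association") == "other":
            continue  # about someone else, e.g. a family member
        kept.append(entity["text"])
    return kept


recorded = findings_to_record(SAMPLE_ENTITIES)
print(recorded)
```

Without this step, a naive keyword match would wrongly add chest pain, diabetes and a rash to the patient's record.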

Partner Solutions

Next to our first-party NLP tool, we are also working very closely with leading NLP partners in Europe to support our healthcare customers today. There are several reasons why we don’t lead with a single solution, such as localization requirements, retraining possibilities or tailoring based on specific data. For example, in Belgium we have been working with EarlyTracks, who have a mission to “assist the healthcare sector in the improvement of the quality of their medical records”. Their NLP model works in Dutch/Flemish and French. In Denmark we have been working with Corti, who provide a digital assistant to support and augment health care teams in improving patient outcomes and internal performance. In Norway we have been working with Anzyz. Their Text Analytics enables organizations, cross-industry, to rapidly gain valuable insight from their unstructured text, and their solution is 100% self-learning. These are only a couple of the partners we are working with, but it clearly shows that the technology is here, and partners are starting to build high-quality models, together with customers, to improve healthcare at scale.

Supporting our Nurses

In the last years, together with Panton, we have been interviewing nurses and nurse managers from different hospitals and countries. The goal was to better understand and map out the Day in the Life of a nurse. The result of these workshops was an extensive document with insights into their current way of working and the challenges that come with it. Based on these insights, we brainstormed on what potential solutions could look like and created many different mock-ups.

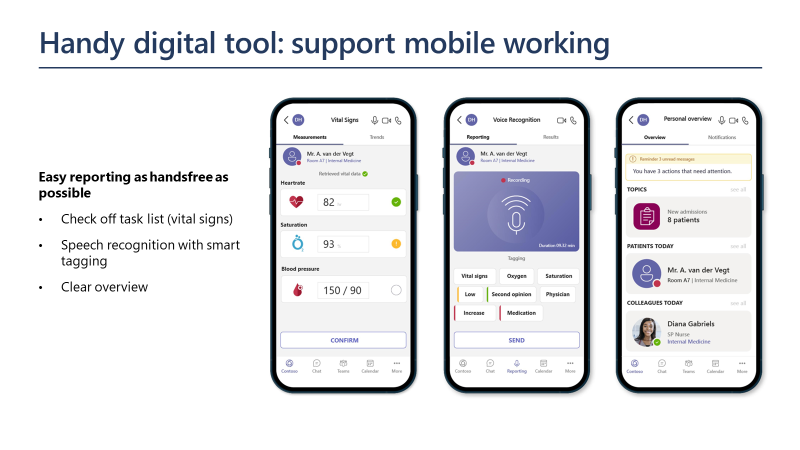

One of these potential solutions was a handy digital tool with which nurses could work on mobile devices, and where hands-free reporting was a key requirement.

Based on this idea, we started to mock up several graphical user interfaces showing what such a mobile tool could look like. How could we support nurses in checking off their task lists remotely, or enable speech recognition with smart tagging/codification? The resulting mock-ups are shown below.

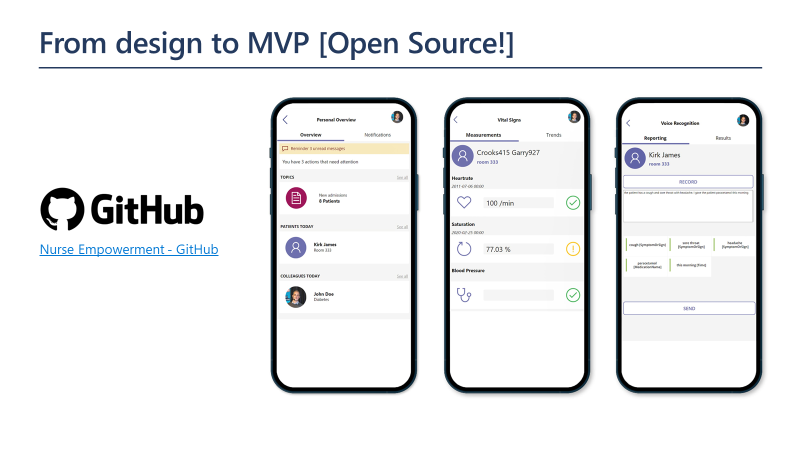

As I wrote earlier in this article, the technology is available today, but you still need some glue to combine it in a scalable and flexible way. So based on the mock-ups we decided to create a digital tool, together with Stratiteq, based on the Microsoft Power Platform. The reason we used a Power App is that every hospital can use and modify it in an easy and efficient way, via low-code development and without long development and integration cycles.

We then open-sourced the MVP solution on GitHub, so healthcare organizations could easily validate the technology and have a solid start to support their nurses. All the data in the Power App is served from a FHIR store, meaning it can easily visualize patient-relevant information from most EHRs. We included Speech to Text in the Power App via the Nuance Dragon Medical Speech Kit. The codification of the transcribed text was done via Text Analytics for Health, where the results are returned in a FHIR-compliant format that can be sent directly back to the EHR. And because the Power Platform has an integrated automation engine, we could combine the Nuance Speech to Text with the API from Text Analytics for Health in an easy and flexible way. You can see the result in action below.

With the handy digital tool, we used the model from Text Analytics for Health, but this can easily be swapped with other NLP models, such as a custom-made model to detect tariffication codes, or any other model you have in mind. The idea is that you can play with your relevant building blocks, combine Speech to Text with NLP and create a valuable tool in days, instead of months, which allows you to do more with less.
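
The building-block idea can be sketched in a few lines. This is not the Power App implementation, just a minimal illustration in Python under stated assumptions: the NLP step is any function that turns a transcript into coded entities, so it can be swapped without touching the rest. `keyword_codifier` is a toy stand-in for a real model, the concept codes are sample values, the UMLS system URI is an assumption, and the FHIR `Condition` resource is reduced to a few fields.

```python
from typing import Callable, Dict, List

Entity = Dict[str, str]
Codifier = Callable[[str], List[Entity]]

# Assumed code system URI for UMLS concept identifiers.
UMLS_SYSTEM = "http://www.nlm.nih.gov/research/umls"


def keyword_codifier(transcript: str) -> List[Entity]:
    """Toy stand-in for an NLP model: looks up known terms in a tiny dictionary."""
    vocabulary = {"headache": "C0018681", "fever": "C0015967"}  # sample codes
    lowered = transcript.lower()
    return [
        {"text": term, "code": code}
        for term, code in vocabulary.items()
        if term in lowered
    ]


def to_fhir_condition(entity: Entity, patient_id: str) -> Dict[str, object]:
    """Map one coded entity to a minimal FHIR Condition resource."""
    return {
        "resourceType": "Condition",
        "subject": {"reference": f"Patient/{patient_id}"},
        "code": {
            "coding": [{"system": UMLS_SYSTEM, "code": entity["code"]}],
            "text": entity["text"],
        },
    }


def codify_transcript(
    transcript: str, patient_id: str, codifier: Codifier
) -> List[Dict[str, object]]:
    """Run the pluggable NLP step, then map its output to FHIR resources."""
    return [to_fhir_condition(e, patient_id) for e in codifier(transcript)]


resources = codify_transcript(
    "Patient reports fever and a mild headache.", "123", keyword_codifier
)
for resource in resources:
    print(resource["code"]["text"], resource["code"]["coding"][0]["code"])
```

Swapping the model then comes down to passing a different function as `codifier`, for example one that calls a hosted NLP service or a custom tariffication model, while the transcription and FHIR steps stay the same.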

It’s exciting to see what is possible today with technology. By merely combining existing technology such as Speech to Text with Natural Language Processing, we can improve many processes and support our care teams at scale. I am looking forward to all the new innovation in the coming months and years, to see how customers and partners will pave the way and show how technology is making a difference in their daily operations.

Bert